Build reliable AI agents with

Build better AI agents with

The #1 AI engineering platform

to test your AI agents in pre-production and production

Traces

Evaluations

Agent Simulations

Prompts

Datasets

Analytics

Collaboration

Join thousands of AI developers using LangWatch to ship complex AI reliably

400k+

Monthly installs

500k+

Daily evaluations to prevent hallucinations

Saved on quality control per week

4k+

GitHub stars

Prototype, evaluate and monitor AI features

1. Build
2. Evaluate
3. Deploy
4. Monitor
5. Optimize

Ship Reliable AI

There’s a better way to ship reliable AI

The strongest AI teams don’t rely on guesswork or one-off prompt tweaks. They move faster and with more confidence by building a continuous quality loop around their AI systems.

LangWatch gives your entire team, engineers and domain experts alike, a single platform to define evaluations, run experiments, test and simulate AI agents, and monitor production behavior.

By closing the loop between development, testing, and real-world usage, LangWatch helps teams ship AI systems with confidence and improve them with every release.

Build

Essential tools to develop agents faster and safer without slowing teams down

Prompt & Model Management

Version, compare, and deploy prompt and model changes with full traceability. Roll out experiments safely using feature-flag–style controls, with clear audit trails for every change.

Evaluations

Create and tune custom evals that measure quality specific to your product

LLM Observability

Instantly search and inspect any LLM interaction across environments. Debug failures, investigate incidents, and support audits with complete visibility from development through production.
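The Evaluations card above describes custom evals that measure quality specific to your product. As a rough illustration of what such a check can look like, here is a minimal Python sketch of a rule-based evaluator for a hypothetical support bot. The criteria (mentioning a 30-day refund window, staying under a word limit) and the names `EvalResult` and `refund_policy_eval` are assumptions invented for this example; this is not the LangWatch SDK API.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Outcome of one evaluation: pass/fail flag, score in [0, 1], and a reason."""
    passed: bool
    score: float
    reason: str


def refund_policy_eval(answer: str, max_words: int = 80) -> EvalResult:
    """Hypothetical product-specific check for a support bot.

    Passes only if the answer mentions the 30-day refund window and stays
    concise. Both criteria are illustrative assumptions, not built-in evaluators.
    """
    mentions_window = "30 days" in answer.lower()
    word_count = len(answer.split())
    concise = word_count <= max_words

    # Score is the fraction of criteria met (True counts as 1, False as 0).
    score = (int(mentions_window) + int(concise)) / 2

    failures = []
    if not mentions_window:
        failures.append("does not mention the 30-day refund window")
    if not concise:
        failures.append(f"too long ({word_count} words > {max_words})")
    reason = "; ".join(failures) if failures else "all criteria met"
    return EvalResult(passed=not failures, score=score, reason=reason)


if __name__ == "__main__":
    print(refund_policy_eval("You can request a refund within 30 days of purchase."))
    print(refund_policy_eval("Please contact support."))
```

Returning a score and a human-readable reason, rather than a bare boolean, makes it easier to see why a case failed when the same check runs over many outputs.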

Test

Clearly measure the impact of every update. Foster a culture of continuous experimentation

Agent Simulations for complex agentic AI

Run thousands of synthetic conversations across scenarios, languages, and edge cases

Batch Tests & Experiments

Run tests directly from the LangWatch platform or your code. Track the impact of every change across prompts and agent pipelines.

Auto-Evals

Automatically execute your full test suite with LangWatch, covering both pre-release testing and production monitoring.
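At their core, batch tests and experiments run every case in a dataset through each pipeline variant and compare aggregate scores. The sketch below shows that loop in plain Python under stated assumptions: the three-case dataset, the exact-match scorer, and the two stub functions standing in for prompt versions (`prompt_v1`, `prompt_v2`) are all hypothetical. A real setup would call an LLM and record results in a tracking tool rather than print them.

```python
from statistics import mean
from typing import Callable, Dict, List

# A tiny hypothetical dataset: each case pairs an input with its expected answer.
DATASET: List[Dict[str, str]] = [
    {"question": "What is 2 + 2?", "expected": "4"},
    {"question": "Capital of France?", "expected": "Paris"},
    {"question": "Opposite of hot?", "expected": "cold"},
]


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the normalized output equals the expected answer, else 0.0."""
    return float(output.strip().lower() == expected.strip().lower())


def run_experiment(name: str, pipeline: Callable[[str], str]) -> dict:
    """Run one pipeline variant over every dataset case and aggregate the scores."""
    scores = [exact_match(pipeline(c["question"]), c["expected"]) for c in DATASET]
    return {"variant": name, "accuracy": mean(scores), "n": len(scores)}


# Hypothetical stand-ins for two prompt versions; in practice these would call an LLM.
def prompt_v1(question: str) -> str:
    answers = {"What is 2 + 2?": "4", "Capital of France?": "paris"}
    return answers.get(question, "I don't know")


def prompt_v2(question: str) -> str:
    answers = {"What is 2 + 2?": "4", "Capital of France?": "Paris", "Opposite of hot?": "Cold"}
    return answers.get(question, "I don't know")


if __name__ == "__main__":
    for result in (run_experiment("v1", prompt_v1), run_experiment("v2", prompt_v2)):
        print(f"{result['variant']}: accuracy={result['accuracy']:.2f} over {result['n']} cases")
```

Because both variants are scored against the same dataset with the same metric, a difference in accuracy can be attributed to the change between versions rather than to different test inputs.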

Optimize

Build a continuous feedback loop that helps you ship AI products users genuinely trust and enjoy.

Human-in-the-loop

Combine evaluations with domain experts and real user feedback to surface issues early and extract clear, actionable insights from live production data.

Data review & labeling

Collaborative workflows for teams to inspect, annotate, and analyze data together—spotting patterns and sharing learnings across engineering, product, and business stakeholders.

Dataset management

Convert production traces into reusable test cases, golden datasets, and benchmarks to power experiments, regressions, and fine-tuning.

Performance optimization with DSPy

Systematically improve prompts, models, and pipelines using structured experimentation and optimization techniques
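The Dataset management card describes turning production traces into reusable test cases. A minimal sketch of that conversion might keep only traces a reviewer approved, use their output as the expected answer, and skip duplicate inputs. It assumes a simplified trace record with an optional reviewer label; the `Trace` shape, the `"good"` label convention, and `traces_to_dataset` are hypothetical and not LangWatch's data model.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trace:
    """Hypothetical minimal trace: one LLM interaction plus an optional human label."""
    trace_id: str
    input: str
    output: str
    label: Optional[str] = None  # e.g. "good" or "bad" from a reviewer


def traces_to_dataset(traces: list[Trace]) -> list[dict]:
    """Turn reviewed traces into test cases.

    Keeps only traces labeled "good", uses their output as the expected answer,
    and drops duplicate inputs (after trimming and lowercasing) so each case
    is tested once.
    """
    seen: set[str] = set()
    dataset = []
    for t in traces:
        key = t.input.strip().lower()
        if t.label != "good" or key in seen:
            continue
        seen.add(key)
        dataset.append(
            {"input": t.input, "expected_output": t.output, "source_trace": t.trace_id}
        )
    return dataset


if __name__ == "__main__":
    traces = [
        Trace("t1", "How do I reset my password?", "Go to Settings > Security.", "good"),
        Trace("t2", "how do I reset my password? ", "Click 'Forgot password'.", "good"),
        Trace("t3", "What's your refund policy?", "We never refund.", "bad"),
        Trace("t4", "Do you ship abroad?", "Yes, to 40 countries."),
    ]
    print(json.dumps(traces_to_dataset(traces), indent=2))
```

Normalizing inputs before de-duplication keeps near-identical user questions from inflating the dataset; whether to use a stricter or fuzzier key is a design choice that depends on the product.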

Seamless integration with your tech stack

Works with any LLM app, agent framework, or model

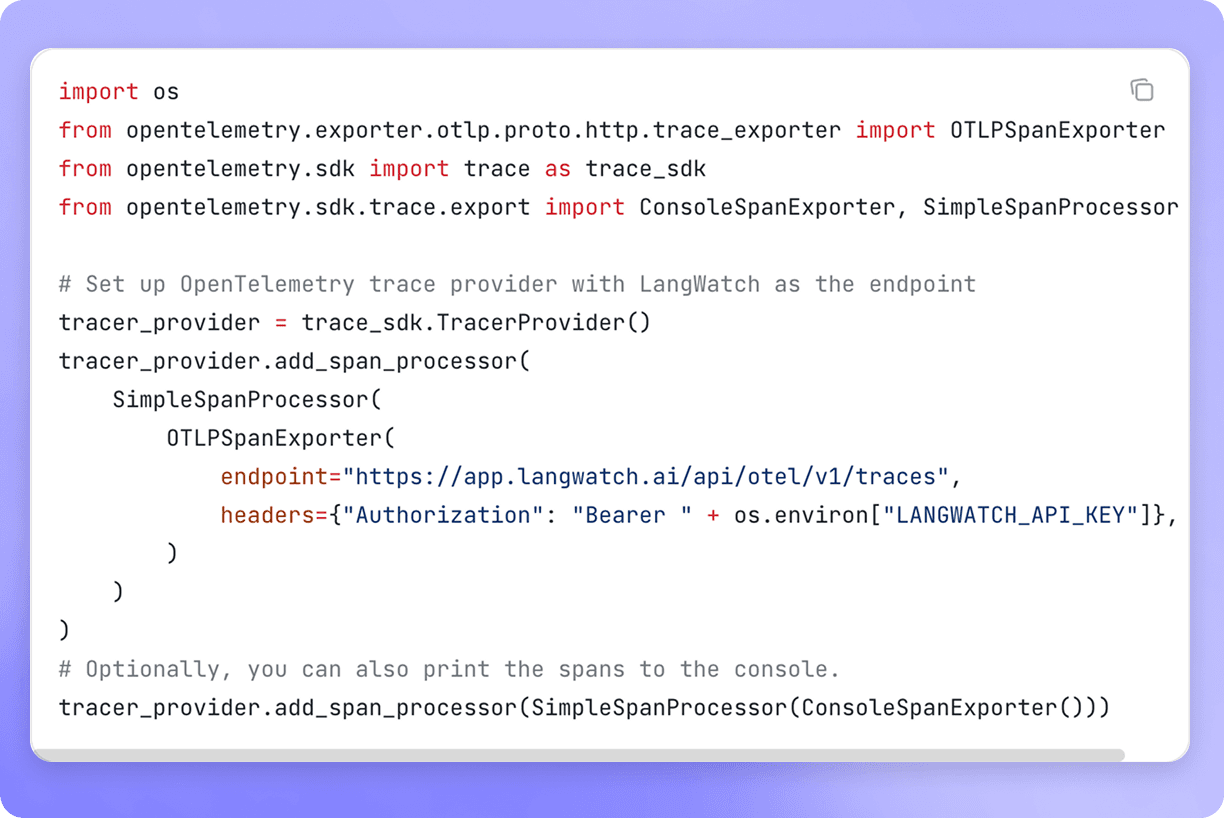

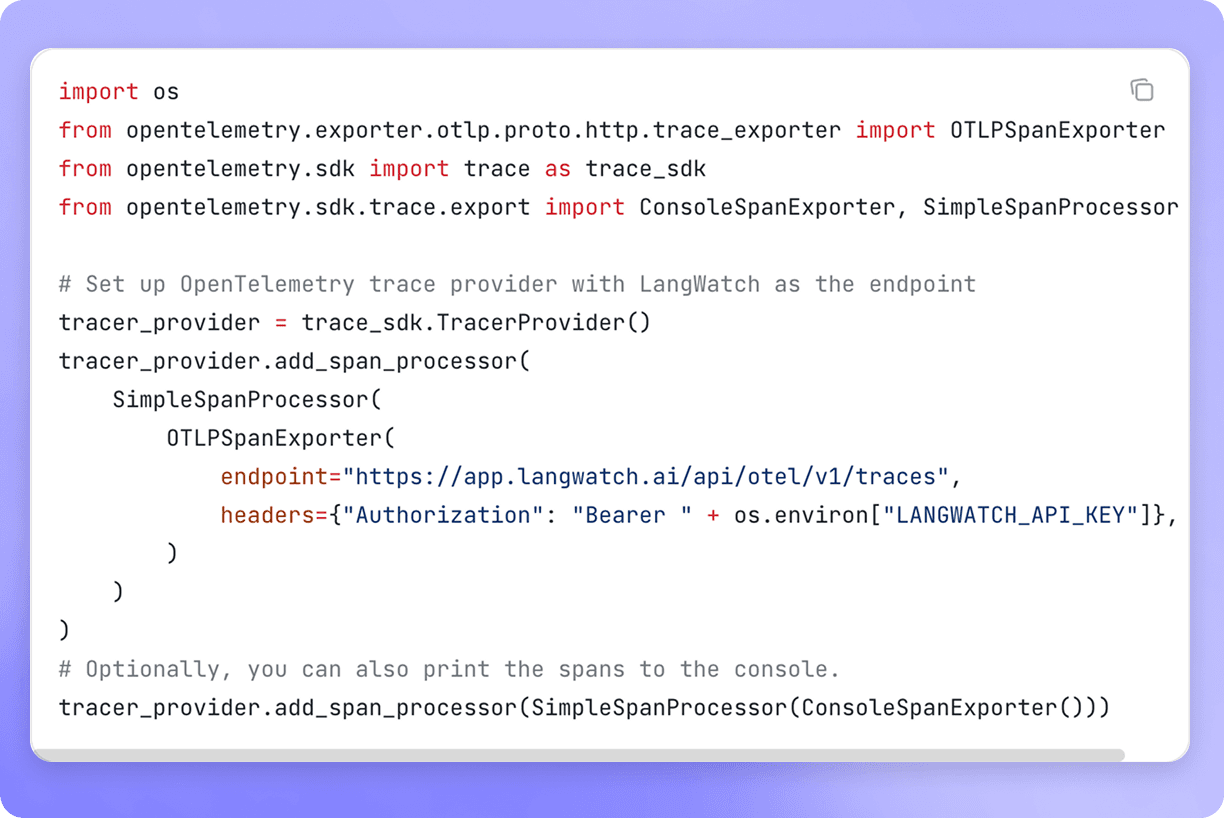

OpenTelemetry-native; integrates with all models and AI agent frameworks

Evaluations and Agent Simulations run on your existing testing infrastructure

Fully open-source; run locally or self-host

No data lock-in: export any data you need and interoperate with the rest of your stack

Python

TypeScript

`uv add langwatch`

Collaborate to control reliable AI

Iterate, evaluate, monitor collaboratively

The systematic way of testing that turns experiments into reliable AI

Engineer

Access everything in just a few lines of code. Everything in LangWatch works with or without code: engineers can run prompts, flows, and evaluations programmatically, while non-technical users can use the UI.

Data Scientist

Product Manager

Domain Experts

Empower non-technical team members to contribute to AI quality. Let them easily build evaluations and annotate model outputs, bringing them into the quality testing loop.

Amit Huli

Head of AI - Roojoom

“When I saw LangWatch for the first time, it reminded me of how we used to evaluate models in classic machine learning. I knew this was exactly what we needed to maintain our high standards at enterprise scale.”

David Nicol

CTO - Productive Healthy Work Lives

“Having evaluated numerous platforms, LangWatch was the only one that meaningfully resolved our quality gaps. The difference has been substantial.”

Lane Cunmmingham

VP Engineering - GetGenetica - Flora AI

“LangWatch has brought us our monitoring and evaluations with an intuitive analytics dashboard. The Optimization Studio with DSPy brings the kind of progress we were hoping for as a partner.”

Kjeld O

AI Architect, Entropical AI agency

“I’ve seen a lot of LLMOps tools, and LangWatch is solving a problem that everyone building with AI will have when going to production. The best part is their product is so easy to use.”

Enterprise-grade controls:

Your data, your rules

On-prem, VPC, air-gapped or hybrid

ISO 27001 and SOC 2 certified, with GDPR controls

Role-based access controls

Use custom models and integrate via API

FAQ

Frequently Asked Questions

How does LangWatch work?

What is LLM observability?

What are LLM evaluations?

Is a self-hosted version of LangWatch available?

How does LangWatch compare to Langfuse or LangSmith?

What models and frameworks does LangWatch support, and how do I integrate it?

Can I try LangWatch for free?

How does LangWatch handle security and compliance?

How can I contribute to the project?

Ship agents with confidence, not crossed fingers

Get up and running with LangWatch in as little as 5 minutes.

Resources

Integrations
